Retrieval Augmented Generation (RAG) is one of the most popular techniques to improve the accuracy and reliability of Large Language Models (LLMs). This is possible by providing additional information from external data sources. This way, the answers can be tailored to specific contexts and updated without fine-tuning and retraining.

This blog post will help you understand how RAG systems work and how can they help you improve your work.

Understanding Retrieval Augmented Generation Systems

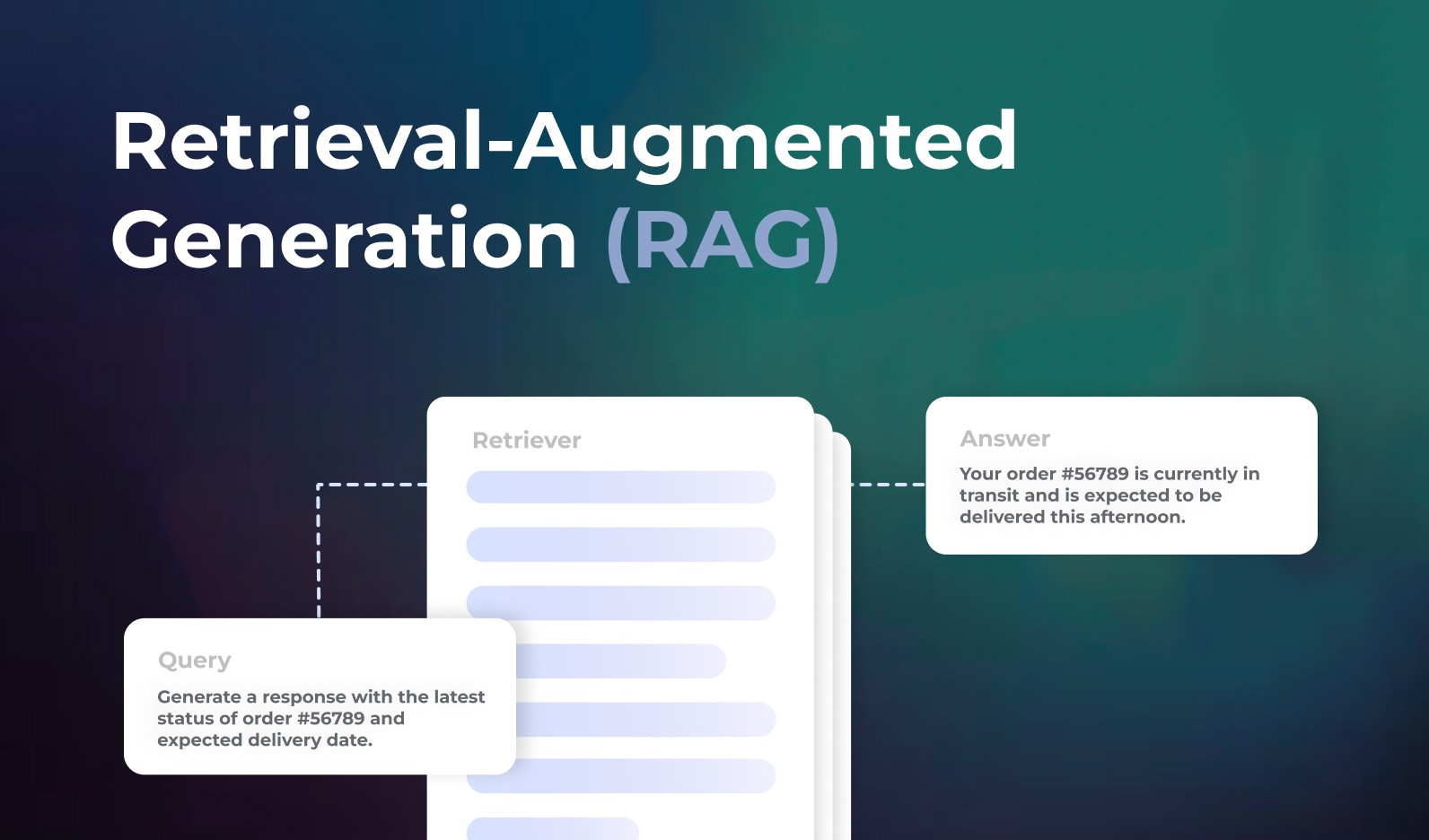

Retrieval augmented generation systems are created to make the responses they generate better and more relevant. These systems work in two steps: first, they find and gather useful information from a knowledge base, and then they use that information to generate a response.

By doing this, the system ensures that the response is based on real-world knowledge, which makes it more accurate and reliable. In recent years, retrieval augmented generation systems have shown promising results in different tasks like answering questions, creating dialogue systems, and summarizing information.

However, it’s important to find the right amount of information to retrieve at a time, as this greatly affects the system’s performance optimization.

What is the Need for RAG?

The development of RAG is a direct response to the limitations of Large Language Models (LLMs) like GPT.

While LLMs have shown impressive text generation capabilities, they often struggle to provide contextually relevant responses, hindering their utility in practical applications.RAG, on the other hand, aims to solve this problem by focusing on understanding what users want and providing meaningful and relevant replies that fit the conversation’s context.

Benefits of using RAG

Retrieval augmented generation brings several benefits to enterprises looking to employ generative models while addressing many of the challenges of large language models.

Improved Visual Communication

RAG provides a simple and visual way to communicate the status of projects, tasks, or any other items. The color-coded system allows for quick information processing and understanding at a glance. It helps team members, stakeholders, and managers easily identify the progress or status of different elements.

Enhanced Decision-Making

RAG facilitates better decision-making by providing a clear indication of the status of various items. The color-coded system helps prioritize tasks or projects based on their urgency or progress. This allows managers to allocate resources effectively and make informed decisions about where to focus their efforts.

Increased Accountability

It promotes accountability within teams and individuals. By assigning colors to different items, it becomes evident who is responsible for each task and how well it is progressing. This encourages individuals to take ownership of their work and motivates them to meet deadlines and achieve targets.

Efficient Resource Allocation

RAG assists in optimizing resource allocation. By identifying items marked as “Red” or “Amber,” which indicate issues or delays, teams can quickly address those areas that require immediate attention. This prevents unnecessary resource allocation to items that are progressing well and ensures that resources are effectively utilized where they are most needed.

Streamlined Progress Tracking

RAG simplifies progress tracking by providing a standardized system for monitoring and reporting. It allows for consistent updates on the status of different items, making it easier to track progress over time. This helps in identifying bottlenecks, addressing delays, and keeping stakeholders informed about the overall progress of a project or task.

Efficient Communication and Collaboration

RAG serves as a common language for communication within teams and across stakeholders. It eliminates ambiguity and facilitates effective communication by providing a visual representation of the status of various items. This promotes collaboration, as team members can quickly understand the current state of affairs and work together to address any challenges.

Implementing Retrieval Augmented Generation (RAG) in Your Work

Retrieval Augmented Generation (RAG) is a powerful approach that combines information retrieval and text generation to improve the quality and relevance of generated responses. Here are key steps to effectively implement RAG in your work:

Understand RAG Fundamentals

Familiarize yourself with the basics of RAG and how it works. RAG involves a two-step process: retrieving relevant information from a knowledge base and then using that information to generate a response. This combination ensures that the generated response is grounded in real-world knowledge, making it more informed and accurate.

Define Your Knowledge Base

Identify the knowledge base or information sources that are relevant to your work. This could include internal documents, databases, websites, or any other repositories of information. Determine the scope and coverage of your knowledge base to ensure it aligns with your specific needs and the type of responses you aim to generate.

Select Suitable Retrieval Methods

Choose appropriate retrieval methods to extract information from your knowledge base. This could involve keyword-based search, semantic matching, or more advanced techniques such as neural retrieval models. Consider the nature of your work and the complexity of the information you need to retrieve when selecting the most suitable retrieval methods.

Train or Fine-Tune Your RAG Model

Depending on your requirements, you may need to train or fine-tune a RAG model using your specific data and knowledge base. This step involves leveraging existing pre-trained language models, such as GPT, and adapting them to your specific domain or context. Training or fine-tuning ensures that your RAG model understands and generates responses that are relevant to your work.

Develop an Integration Strategy

Determine how you will integrate RAG into your existing workflows or systems. This could involve developing APIs, building custom applications, or utilizing existing frameworks that support RAG. Consider the technical requirements, scalability, and compatibility with your current infrastructure when designing your integration strategy.

Evaluate and Refine Performance

Continuously evaluate the performance of your RAG implementation. Monitor the quality and relevance of the generated responses and gather feedback from users or stakeholders. Use metrics such as precision, recall, or user satisfaction to measure the effectiveness of your RAG system. Identify areas for improvement and refine your implementation iteratively.

Ensure Ethical and Responsible Use

When implementing RAG, be mindful of ethical considerations and responsible use of generated content. Ensure that the information retrieved and generated aligns with legal and ethical guidelines. Regularly review and update the knowledge base to maintain accuracy and relevance, and implement safeguards to prevent the propagation of misleading or harmful information.

Provide User Support and Training

Offer user support and training to those who will interact with the RAG system. Provide documentation, guidelines, or training sessions to help users understand how to effectively use and interact with the generated responses. Address any questions or concerns they may have and encourage feedback to continually improve the user experience.

10 Ways to Improve the Performance of Retrieval Augmented Generation

Here are 10 ways to improve the performance of retrieval augmented generation (RAG):

Clean your data

Ensure that your data is organized logically and does not have conflicting or redundant information, as it affects the retrieval and generation steps of RAG.

Explore different index types

Consider using keyword-based search or a hybrid approach with embeddings based on your specific use case.

Experiment with your chunking approach

Find the optimal size for dividing the context data into chunks, considering the trade-off between retrieval and generation quality.

Play around with your base prompt

Customize the instructions given to the language model (LLM) to guide its behavior and improve the quality of generated responses.

Try metadata filtering

Add metadata tags to your data, such as dates, to prioritize recent information during retrieval.

Use query routing

Employ multiple indexes and direct queries to the most appropriate index based on their characteristics or types.

Look into reranking

Reorder retrieved results based on relevance rather than just similarity, using techniques like reranking.

Consider query transformations

Alter the user’s query by rephrasing, using hypothetical responses, or breaking down complex queries into multiple questions.

Fine-tune your embedding model

Customize the pre-trained embedding model to align better with the domain-specific terms and improve retrieval metrics.

Start using LLM dev tools

Utilize debugging tools provided by frameworks like LlamaIndex or LangChain, and explore additional tools in the ecosystem for in-depth analysis of your RAG system.

What are the Diverse Approaches in RAG

RAG offers a spectrum of approaches for the retrieval mechanism, catering to various needs and scenarios:

Simple

This approach retrieves relevant documents and seamlessly incorporates them into the generation process. It ensures that the responses are comprehensive by considering a wide range of information sources.

Map Reduce

With this approach, responses generated for each document are combined to create the final response. It synthesizes insights from multiple sources to provide a well-rounded answer.

Map Refine

The Map Refine approach involves iteratively refining responses using initial and subsequent documents. This continuous improvement process enhances the quality of the responses over time.

Map Rerank

In this approach, responses are ranked, and the highest-ranked response is selected as the final answer. The focus is on prioritizing accuracy and relevance in the generated responses.

Filtering

Advanced models are used to filter documents, resulting in a refined set of information that serves as the context for generating more focused and contextually relevant responses.

Contextual Compression

This approach involves extracting pertinent snippets from documents to generate concise and informative responses. It helps minimize information overload while still providing relevant information.

Summary-Based Index

By leveraging document summaries, this approach indexes document snippets and generates responses using relevant summaries and snippets. It ensures that the answers are concise yet informative.

Forward-Looking Active Retrieval Augmented Generation (FLARE)

FLARE predicts forthcoming sentences by initially retrieving relevant documents and then iteratively refining the responses. It ensures a dynamic and contextually aligned generation process.

Conclusion

Retrieval-augmented generation represents a significant leap forward in the field of natural language processing. Its ability to integrate external knowledge into language generation opens up many possibilities across various sectors. As the technology continues to evolve, it holds the promise of greatly enhancing the efficiency and effectiveness of AI-driven communication and information processing.

By utilizing RAG’s diverse methodologies, companies such as Vectorize.io can improve their response quality, deliver thorough and context-sensitive answers, and reduce information overload. The capability to merge insights from varied sources, prioritize responses based on relevance and precision, and employ sophisticated models for filtering and contextual compression allows users to access the most pertinent and succinct information. This integration significantly enhances Vectorize.io’s ability to serve its users effectively.