TL;DR:

- A lot of startups fail because of bad tech choices (around 35%).

- If your tech can’t bend, scale, or stay safe, you’re toast.

- Sometimes “no market need” really means “our tech sucked.”

- Loads of dead startups leave clues about what breaks when things get big.

- This guide covers 7 big mistakes, plus when to call in the tech pros.

How Bad Tech Ruins Startups

Tech decisions don’t just mess with code; they mess with your money, timing, and how people use your product.

| What’s Broken? | How Tech Makes It Worse | How Bad Is It? |
|---|---|---|
| Can’t change fast enough | Messy code = 3x longer to change | Your rival ships in 2 months; you need 6! |
| Burning cash like crazy | No auto-scale = wasting tons of server power | Burning $500K+/year on nothing |
| Can’t add new stuff | Old tech = 2x slower | Losing big deals worth $100K–$2M a year |
| People hate using your thing | Slow code = losing users | Losing money every month |

Real Talk: Jawbone bit the dust in 2017 because their tech couldn’t handle a million devices. People lost data, ditched them for Fitbit, and the company died even after raising $900M.

Mistake #1: The Tech That Won’t Change

How It Goes Down: Code gets so tangled that changing one thing breaks a bunch of others. You go from shipping updates all the time to barely shipping at all.

Real Talk: Fyre Festival’s website worked fine… until 100,000 people tried to buy tickets at once. The system crashed because it couldn’t handle the load.

When This Kills Startups:

- Updates take forever and need everyone to help.

- Changing anything means touching a million files.

- Your senior people refuse to touch certain code.

- Your Amazon bill doubled, but traffic only went up a little.

- New people take ages to get up to speed.

The Fix (No Need to Go Overboard):

You don’t need the fanciest setup right away. Just get the right tech for where you’re at.

When to Break It Apart:

- You have 8+ people working on the same code.

- You deploy less than once a day.

- Changing one thing breaks everything.

How to Do It:

- Small teams: slowly pull things out over time (6-12 months).

- Big teams: build new stuff alongside the old (12-18 months).
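The “build new stuff alongside the old” approach is often called the strangler fig pattern: a thin routing layer sends each request to either the old system or a new service, and routes move over one at a time. A minimal sketch, assuming hypothetical handlers (`legacy_handler`, `billing_service_handler`) and a hypothetical `MIGRATED_PREFIXES` table; in practice this routing usually lives in a reverse proxy or API gateway rather than in application code.

```python
from typing import Callable, Dict

# Hypothetical handlers: each takes a request path and returns a response string.
def legacy_handler(path: str) -> str:
    return f"monolith handled {path}"

def billing_service_handler(path: str) -> str:
    return f"billing service handled {path}"

# Hypothetical table of routes already carved out of the monolith.
# Moving a feature over (or rolling it back) is a one-line change here.
MIGRATED_PREFIXES: Dict[str, Callable[[str], str]] = {
    "/billing": billing_service_handler,
}

def route(path: str) -> str:
    """Send migrated routes to the new service; everything else stays on the monolith."""
    for prefix, handler in MIGRATED_PREFIXES.items():
        if path == prefix or path.startswith(prefix + "/"):
            return handler(path)
    return legacy_handler(path)

if __name__ == "__main__":
    print(route("/billing/invoices/42"))  # billing service handled /billing/invoices/42
    print(route("/users/7"))              # monolith handled /users/7
```

Because a route moves by editing one table entry, rolling back is just as cheap, which is what makes a long side-by-side migration safer than a single big cutover.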

What to Expect:

- Update way more often (5-10x).

- Fix stuff way faster (half the time or less).

- Tech costs might go up a bit at first, then level out.

Real Win:

Etsy broke up their big system over 18 months. They started updating 10x faster, had no downtime for customers, and got new stuff out quicker, which helped them stay ahead.

Mistake #2: Forgetting to Think About Scale

How It Goes Down: You pick the easiest tech without thinking about the future. “We’ll scale later” turns into “We’re down during our busiest day!”

Real Talk: When Clubhouse blew up, their system couldn’t handle it. People had to wait forever to do basic stuff. They lost users to other apps.

When You’ll Feel the Pain:

| What You Store | What You Should Try | Why Bother? | Who Does This? |
|---|---|---|---|
| User timelines | Split data by user (sharding) | Handles way more | |
| Sensor data | Use a time-series database | Very fast writes | Particle.io |
| Orders | Split data by location | Go global easier | Shopify |
| Social graphs | Use a graph database | Fast connections | |
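Splitting user timelines by user usually means sharding: all of one user’s rows live on the same database, picked by hashing the user ID. A minimal sketch, assuming a hypothetical fixed list of four shards. Plain modulo hashing remaps most users when the shard count changes, so real systems often use consistent hashing or a lookup table instead.

```python
import hashlib

# Hypothetical shard names; in practice these would be connection strings.
SHARDS = ["db-0", "db-1", "db-2", "db-3"]

def shard_for_user(user_id: str) -> str:
    """Pick a shard deterministically from the user ID.

    A stable hash (not Python's built-in hash(), which is randomized per
    process for strings) keeps a user's data on the same shard across
    restarts and across servers.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(SHARDS)
    return SHARDS[index]

if __name__ == "__main__":
    for uid in ["alice", "bob", "carol"]:
        print(uid, "->", shard_for_user(uid))
```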

When to Worry About Scale:

- Early days: Use simple, managed setups that scale automatically. Cost: Cheap. Focus: Making sure people want your product.

- Growing: Add some quick tricks like caching to buy time before you need big changes. Cost: A bit more. Focus: Getting ready for real growth.

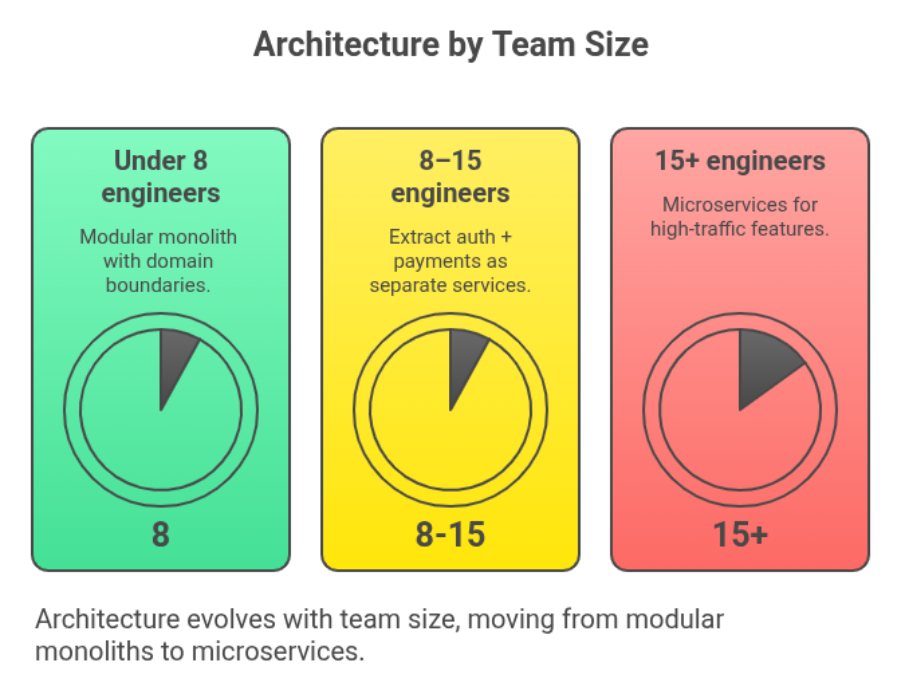

- Big: Break things up, maybe use microservices for the busy parts. Cost: A lot. Focus: Keeping everything online so you don’t lose money.
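The “quick tricks like caching” stage often starts with the cache-aside pattern: check the cache, fall back to the database on a miss, and store the result with an expiry. A minimal in-process sketch, assuming a hypothetical `load_user_from_db` function; a shared cache such as Redis plays the same role when you have several servers.

```python
import time
from typing import Any, Callable, Dict, Tuple

class TTLCache:
    """Tiny cache-aside helper: entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]                       # fresh hit: skip the database
        value = loader()                        # miss or expired: go to the source
        self._store[key] = (now + self.ttl, value)
        return value

db_calls = 0

def load_user_from_db(user_id: str) -> dict:
    """Hypothetical slow database read."""
    global db_calls
    db_calls += 1
    return {"id": user_id, "name": "Ada"}

if __name__ == "__main__":
    cache = TTLCache(ttl_seconds=60)
    for _ in range(5):
        cache.get_or_load("user:1", lambda: load_user_from_db("1"))
    print("database calls:", db_calls)  # database calls: 1
```

The TTL is the trade-off knob: longer expiries take more load off the database but serve staler data.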

Mistake #3: Thinking Security is Optional

How It Goes Down: “We’ll add security later” becomes a huge data breach, which ruins trust and costs a fortune. Hackers like easy targets.

Real Talk: Paytm got hacked because their security was weak. Attackers stole payment info. They had to fix it in a panic while dealing with angry customers and the press.

Security Math:

- Breach hurts: $150K–$500K (fixing it, paying people back, losing deals)

- Security helps: $5K–$20K (doing it right from the start)

- Good investment: Even at $20K upfront, avoiding a $150K–$500K breach is a 7.5x–25x return

- No security = no big deals: Big companies need proof you’re safe
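The return multiple is just breach cost divided by upfront security spend. A quick sketch using only the article’s own ranges; the 7.5x–25x figure uses the $20K upper end of spend, so spending closer to $5K makes the multiple larger.

```python
from typing import Tuple

def roi_range(breach_low: float, breach_high: float, spend: float) -> Tuple[float, float]:
    """Breach cost avoided per dollar of upfront security spend (low, high)."""
    return breach_low / spend, breach_high / spend

if __name__ == "__main__":
    # The article's figures: $150K-$500K breach vs. $20K or $5K of upfront work.
    print(roi_range(150_000, 500_000, 20_000))  # (7.5, 25.0)
    print(roi_range(150_000, 500_000, 5_000))   # (30.0, 100.0)
```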

Security Checklist:

- Starting out: Hash passwords, encrypt sensitive data, and use HTTPS everywhere. It takes a few days and costs almost nothing.

- Growing: Add more layers to block attacks and limit who can access what. This takes a few weeks and costs a bit more.

- Big: Go all-in on security, hire experts, and prove you’re safe. This takes months and costs a lot.
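For the starting-out step, protecting passwords mostly means never storing them in plain text: store a salted, deliberately slow hash instead. A minimal sketch using Python’s standard-library `hashlib.scrypt`; the cost parameters are illustrative assumptions, and maintained libraries such as argon2-cffi or bcrypt are common production choices.

```python
import hashlib
import hmac
import os

# Illustrative scrypt cost parameters (assumption; tune for your hardware).
N, R, P = 2**14, 8, 1
SALT_BYTES = 16

def hash_password(password: str) -> bytes:
    """Return salt + derived key; store this blob, never the raw password."""
    salt = os.urandom(SALT_BYTES)
    key = hashlib.scrypt(password.encode("utf-8"), salt=salt, n=N, r=R, p=P, dklen=32)
    return salt + key

def verify_password(password: str, stored: bytes) -> bool:
    """Re-derive the key with the stored salt and compare."""
    salt, expected = stored[:SALT_BYTES], stored[SALT_BYTES:]
    key = hashlib.scrypt(password.encode("utf-8"), salt=salt, n=N, r=R, p=P, dklen=32)
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(key, expected)

if __name__ == "__main__":
    stored = hash_password("correct horse battery staple")
    print(verify_password("correct horse battery staple", stored))  # True
    print(verify_password("wrong guess", stored))                   # False
```

The random salt means two users with the same password get different hashes, so a leaked table can’t be cracked with one precomputed lookup.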

Mistake #4: Flying Blind

How It Goes Down: Something breaks, but you have no idea why. It takes forever to fix (hours instead of minutes), costs a ton in wasted time, and makes users leave.

Real Talk: When Koo blew up in India, half the site broke. Engineers spent hours guessing what was wrong because they had no monitoring. People left for other sites.

What You Can’t See:

- What’s slow for users

- Where errors happen

- Who’s having problems

- Why your Amazon bill is crazy

- What part of the site is about to crash

- How long database searches take

- How many people sign up

- How many people pay

- How people use each part of your site

Basic Monitoring Setup:

- Logs: record everything

- Metrics: track performance

- Tracing: follow requests
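A cheap way to get logs you can actually search, and a first step toward tracing, is to emit one structured JSON line per request and tag it with a request ID that follows the request through every service. A minimal sketch using Python’s standard `logging` and `json` modules; the field names and the `handle_request` function are hypothetical.

```python
import json
import logging
import time
import uuid
from typing import Optional

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("app")

def log_event(event: str, **fields) -> dict:
    """Emit one JSON object per line so a log tool can filter on any field."""
    record = {"event": event, "ts": time.time(), **fields}
    log.info(json.dumps(record))
    return record

def handle_request(path: str, request_id: Optional[str] = None) -> dict:
    """Hypothetical handler that times its own work and logs the outcome."""
    # Reuse an incoming ID (e.g. from a request header) so log lines from
    # different services can be joined; otherwise start a new one.
    request_id = request_id or uuid.uuid4().hex
    start = time.perf_counter()
    status = 200  # placeholder for the real work
    duration_ms = (time.perf_counter() - start) * 1000
    return log_event("request_finished", request_id=request_id, path=path,
                     status=status, duration_ms=round(duration_ms, 2))

if __name__ == "__main__":
    handle_request("/checkout")
```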

Cost:

- Do it yourself: Cheap

- Pay someone else: More expensive

- Enterprise: Pricey, but needed for big companies

What to Track:

- How fast the site is

- How often errors happen

- How people use the important stuff

- How many people sign up and pay

- How healthy your servers are
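Site speed and errors are usually tracked as a high percentile of response time (p95: 95% of requests finish at least this fast) and the share of requests that fail. A minimal sketch, assuming request records shaped like `(duration_ms, status_code)` and counting 5xx responses as errors; monitoring tools compute the same numbers for you over rolling time windows.

```python
import math
from typing import List, Tuple

def p95(durations_ms: List[float]) -> float:
    """Nearest-rank 95th percentile: 95% of requests took this long or less."""
    if not durations_ms:
        raise ValueError("no samples")
    ordered = sorted(durations_ms)
    rank = math.ceil(0.95 * len(ordered))  # 1-based rank
    return ordered[rank - 1]

def error_rate(requests: List[Tuple[float, int]]) -> float:
    """Fraction of (duration_ms, status) records that returned a 5xx status."""
    if not requests:
        return 0.0
    errors = sum(1 for _, status in requests if status >= 500)
    return errors / len(requests)

if __name__ == "__main__":
    reqs = [(float(ms), 200) for ms in range(1, 100)] + [(2500.0, 503)]
    print(p95([ms for ms, _ in reqs]))  # 95.0
    print(error_rate(reqs))             # 0.01
```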

Mistake #5: Tech That Can’t Bend

How It Goes Down: Your tech is so rigid that changing anything is a nightmare. You lose out to rivals who can move faster.

| Company | Initial Architecture | Pivot Required | Time to Implement | Outcome |
|---|---|---|---|---|
| Dropbox | AWS services | Add file sharing | 6 weeks | Scaled to millions |
| Secret App | Monolith | Add message saves | 8 months | Shut down |

Cost of Being Flexible:

- Takes a bit longer at first (15-20%)

- But saves you a ton of time when you need to change

Checklist for Being Flexible:

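- Organize code by what it does (users, payments)

- Record what happened, not just what is (store events, not only the current state)

- Put clear internal APIs between parts of your system

- Turn features on/off without deploying code (feature flags)

- Change your database schema in small, backward-compatible steps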
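“Record what happened, not just what is” is the idea behind event sourcing: instead of overwriting a balance or status, you append events and derive the current state from them. That history is what makes later pivots cheaper, because a new feature can replay old events into a new view. A minimal sketch with a hypothetical account ledger; real systems persist the event log in a database or stream.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Event:
    kind: str    # "deposited" or "withdrew" (hypothetical event types)
    amount: int  # in cents

class Account:
    def __init__(self) -> None:
        self.events: List[Event] = []  # append-only history

    def deposit(self, amount: int) -> None:
        self.events.append(Event("deposited", amount))

    def withdraw(self, amount: int) -> None:
        if amount > self.balance():
            raise ValueError("insufficient funds")
        self.events.append(Event("withdrew", amount))

    def balance(self) -> int:
        """Current state is derived by replaying events, not stored directly."""
        total = 0
        for e in self.events:
            total += e.amount if e.kind == "deposited" else -e.amount
        return total

    def total_withdrawn(self) -> int:
        """A 'new feature' answered entirely from history that already exists."""
        return sum(e.amount for e in self.events if e.kind == "withdrew")

if __name__ == "__main__":
    acct = Account()
    acct.deposit(10_000)
    acct.withdraw(2_500)
    acct.deposit(1_000)
    print(acct.balance(), acct.total_withdrawn())  # 8500 2500
```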

Mistake #6: Doing Too Much Too Soon

How It Goes Down: You read tech blogs and try to build the fanciest system from day one. This wastes time, money, and makes things way too complicated.

Cost of Overdoing It:

- Takes way longer to build

- Makes your system way more complicated

- Distracts your team

- Burns through cash

Right Tech for the Right Stage:

- Early days: Keep it simple.

- Growing: Add some modularity.

- Big: Break things up into microservices for the busy parts.

Cost Comparison:

| Architecture | Cost | Scale | Work | When to Switch |
|---|---|---|---|---|
| Serverless | Cheap | Handles most | Easy | Series A |
| Containers | Medium | Handles more | Medium | Series B |
| Kubernetes | Pricey | Handles everything | Hard | Dedicated DevOps |

Mistake #7: No Idea Where Your Money Is Going

How It Goes Down: Your Amazon bill skyrockets, but you have no idea why. No tracking, no alerts, no responsibility.

Where the Money Goes:

- Paying for database capacity you don’t use

- Slow, inefficient database queries

- No caching

- Leaving servers on all the time

- Serving files from your own servers instead of using a CDN

How to Fix It:

- Start tracking costs

- Tag everything

- Set up alerts

- Right-size your databases

- Add caching

- Move files to a CDN

- Turn off unused servers
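Tagging and alerts work together: once each resource carries an owner tag, you can roll the bill up per team and flag anything untagged or over budget. A minimal sketch over hypothetical billing line items and budgets; real data would come from your cloud provider’s cost export.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical billing export row: (resource_id, tags, monthly_cost_usd)
LineItem = Tuple[str, Dict[str, str], float]

def cost_by_team(items: List[LineItem]) -> Dict[str, float]:
    """Sum cost per 'team' tag; untagged spend is grouped so it stays visible."""
    totals: Dict[str, float] = defaultdict(float)
    for _, tags, cost in items:
        totals[tags.get("team", "UNTAGGED")] += cost
    return dict(totals)

def budget_alerts(totals: Dict[str, float], budgets: Dict[str, float]) -> List[str]:
    """Return an alert for every team over budget and for any untagged spend."""
    alerts = []
    for team, spent in sorted(totals.items()):
        if team == "UNTAGGED":
            alerts.append(f"${spent:,.0f} of spend has no team tag")
        elif spent > budgets.get(team, float("inf")):
            alerts.append(f"{team} is over budget: ${spent:,.0f} vs ${budgets[team]:,.0f}")
    return alerts

if __name__ == "__main__":
    items = [
        ("db-main", {"team": "payments"}, 4_200.0),
        ("api-cluster", {"team": "payments"}, 1_900.0),
        ("old-test-box", {}, 650.0),
        ("search", {"team": "growth"}, 800.0),
    ]
    totals = cost_by_team(items)
    for alert in budget_alerts(totals, {"payments": 5_000.0, "growth": 1_500.0}):
        print(alert)
```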

Real Win:

Brex cut their server costs by 40% by getting organized.

How to Pick Tech Like a Pro:

- Where are you at? (Just starting? Growing? Can you afford to spend time on tech?)

- What can your team handle? (Do you have the skills? Can you hire people?)

- How big are you now? (Don’t plan for millions of users if you have ten.)

- How much does it cost? (Servers, time, everything.)

- Can you change if you need to? (What if you need a new feature? New database?)

Red Flags (Call a Pro):

- Your server bill doubled, but traffic didn’t.

- Updates take forever.

- Adding new stuff takes ages.

- The site is slow.

- Your team is scared to touch the code.

- You’re getting big, but your tech is still basic.

- Big clients are asking about security.

In Conclusion:

Pick the right tech for where you’re at.

Next Steps:

- Starting out? Download our checklist.

- Growing? Check your tech health.

- In trouble? Get a free consultation.

We Can Help!

We’ve scaled systems handling 100M+ requests per day.

Here is what we provide:

- Architecture Audits: 1-week assessment (free for qualified startups)

- MVP Development: 8-12 weeks

- Scale Consulting: 3-12 months

- Migration Support: 3-9 months

Our Solution (4-Month Engagement):

- Implemented a Redis caching layer (reduced database load by 70%)

- Optimized N+1 queries (identified via observability)

- Migrated to auto-scaling infrastructure

- Implemented security controls for SOC 2 readiness
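An N+1 query pattern is one query to fetch a list followed by one more query per item; it looks harmless in development and hammers the database under load. A minimal sketch using Python’s built-in `sqlite3` with a hypothetical `orders`/`customers` schema, comparing the N+1 version with a single JOIN. The engagement above doesn’t say which fix was applied, so treat this as an illustration of the general technique.

```python
import sqlite3
from typing import List, Tuple

def setup() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
        INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
        INSERT INTO orders VALUES (10, 1, 30.0), (11, 2, 12.5), (12, 1, 8.0);
    """)
    return conn

def orders_n_plus_one(conn: sqlite3.Connection) -> Tuple[List[tuple], int]:
    """1 query for the orders + 1 query per order to look up its customer."""
    queries = 1
    rows = conn.execute("SELECT id, customer_id, total FROM orders ORDER BY id").fetchall()
    result = []
    for order_id, customer_id, total in rows:
        name = conn.execute("SELECT name FROM customers WHERE id = ?", (customer_id,)).fetchone()[0]
        queries += 1
        result.append((order_id, name, total))
    return result, queries

def orders_joined(conn: sqlite3.Connection) -> Tuple[List[tuple], int]:
    """Same data in a single round trip."""
    rows = conn.execute("""
        SELECT o.id, c.name, o.total
        FROM orders o JOIN customers c ON c.id = o.customer_id
        ORDER BY o.id
    """).fetchall()
    return rows, 1

if __name__ == "__main__":
    conn = setup()
    slow, n_slow = orders_n_plus_one(conn)
    fast, n_fast = orders_joined(conn)
    assert slow == fast
    print(f"N+1: {n_slow} queries, JOIN: {n_fast} query")  # N+1: 4 queries, JOIN: 1 query
```

With 3 orders the difference is small; with 10,000 orders the N+1 version makes 10,001 round trips while the JOIN still makes one.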

Outcomes:

- Infrastructure costs: $6K/month (60% reduction)

- API response times: 120ms average (85% improvement)

- Deploy frequency: 8 times per day (CI/CD pipeline)

- Security: SOC 2 Type II attestation completed in 6 months

- Business impact: Closed $2M ARR in enterprise deals previously blocked

What’s your biggest tech challenge right now? Let us know, and we’ll respond with specific, actionable advice.