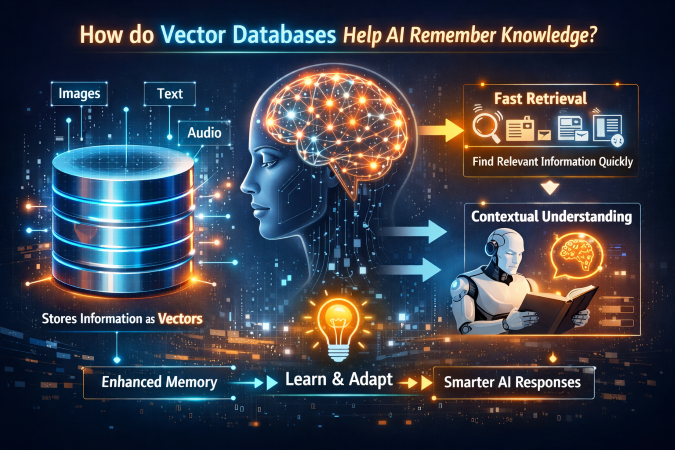

Artificial intelligence systems work with large amounts of information, but knowing information is not the same as remembering it. Memory does not come built into AI models. A language model keeps only what it learned during training plus whatever fits in its current input (the context window). It does not recall earlier data unless a system is built around it. This is where vector databases come in. They give AI a structured way to store and recall knowledge based on meaning, not exact words. This memory design is a core technical topic in advanced learning paths like the Generative Ai Course in Hyderabad, where AI systems are built to handle long-term knowledge without slowing down or losing accuracy. The focus is not storage size. The focus is how memory is searched and reused.

Vector databases change how AI systems look up information. They do not match sentences as raw text. They store meaning as numbers, which lets AI find related ideas even when the words are different.

Why Does AI Need a Different Kind of Memory?

Traditional databases work well for structured data stored as rows, columns, and keys, and they find records by exact values or keywords. Natural language has no fixed wording: a question can be asked in many ways and still mean the same thing. An exact-match lookup misses those paraphrases.

AI models also have limits:

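- They lose older conversation turns once the context window is full

- They cannot fit large document collections into a single input

- They repeat themselves when earlier context is lost

- They may guess, giving confident but wrong answers, when knowledge is missing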

Vector databases help with these problems by acting as external memory. Instead of forcing the model to hold everything, knowledge is stored outside the model and pulled in only when needed.

In simple terms, this kind of retrieval does not look for exact matches. It looks for closeness in meaning. Vector databases are built for exactly this job.
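Here is a minimal Python sketch of the difference between exact matching and closeness in meaning. The three-number vectors are hand-assigned toy values, used only for illustration. In a real system an embedding model would produce them. A plain dictionary lookup stands in for exact-match search.

```python
import math

# Toy "embeddings": hand-assigned 3-d vectors, assumed for illustration only.
# Real embedding models produce vectors with hundreds of dimensions.
memory = {
    "How do I reset my password?": [0.9, 0.1, 0.0],
    "What are your opening hours?": [0.0, 0.2, 0.9],
}

def cosine(a, b):
    """Cosine similarity: 1.0 means same direction, near 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

query_text = "I forgot my login password"
query_vec = [0.8, 0.2, 0.1]  # assumed embedding of the paraphrased question

# Exact-match lookup, as a key-value store would do it: the paraphrase misses.
exact_hit = memory.get(query_text)

# Similarity lookup: pick the stored entry whose vector points the most similar way.
best = max(memory, key=lambda text: cosine(memory[text], query_vec))

print(exact_hit)  # None
print(best)       # How do I reset my password?
```

The exact lookup returns nothing because the wording differs. The similarity lookup still lands on the password question, because its vector is closest to the query's.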

In cities like Bangalore, AI teams work with large developer platforms and product data that changes daily. Training programs such as the Gen Ai Course in Bangalore now focus on memory systems that keep AI tools up to date without retraining the model. This shift is driven by real system limits, not theory.

How Do Vector Databases Store Meaning?

Vector databases store embeddings. An embedding is a list of numbers that represents the meaning of a piece of content. When text, images, or code are passed through an embedding model, the output is not words. It is a vector.

Each vector sits in a high-dimensional space, often with hundreds or thousands of dimensions. Similar ideas sit close together. Different ideas sit far apart.

Key technical steps:

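- Input data is converted into vectors by an embedding model

- Vectors are often normalized to unit length, so that a simple dot product equals cosine similarity

- Indexes are built for fast search

- Similarity is measured with a distance metric such as cosine similarity, dot product, or Euclidean distance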
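The normalization and distance steps can be checked with a few lines of Python. This sketch uses two toy 2-d vectors chosen for illustration. It shows two things. First, once vectors are scaled to unit length, the dot product equals cosine similarity. Second, squared Euclidean distance equals 2 - 2 × cosine, so the three common metrics rank results the same way.

```python
import math

def normalize(v):
    """Scale a vector to unit length (L2 norm of 1)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

raw_a, raw_b = [3.0, 4.0], [4.0, 3.0]      # toy vectors, assumed for illustration
a, b = normalize(raw_a), normalize(raw_b)  # [0.6, 0.8] and [0.8, 0.6]

# After normalization, the plain dot product already equals cosine similarity...
assert math.isclose(dot(a, b), cosine(raw_a, raw_b))

# ...and squared Euclidean distance is 2 - 2 * cosine, so ranking by smallest
# distance gives the same order as ranking by largest cosine similarity.
assert math.isclose(math.dist(a, b) ** 2, 2 - 2 * dot(a, b))

print(round(dot(a, b), 2), round(math.dist(a, b), 4))
```

This is why many systems normalize once at insert time. After that, the cheaper dot product can replace the full cosine formula during search.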

When a user asks a question, the question is turned into a vector by the same embedding model. The database finds the stored vectors nearest to it and returns them as the recalled knowledge.

This process is fast and scalable. It does not depend on fixed keywords. It depends on similarity.
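Below is a minimal sketch of the query step. It assumes a tiny brute-force ("flat") index: every stored vector is compared with the query vector, and the k closest are returned. The document names and their toy embeddings are hypothetical. Production systems replace the full scan with an approximate index.

```python
import heapq
import math

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

# Hypothetical stored memories with toy 3-d embeddings (assumed, not model output).
docs = {
    "refund policy": [0.9, 0.1, 0.1],
    "return shipping label": [0.8, 0.3, 0.0],
    "office holiday schedule": [0.1, 0.1, 0.9],
    "team lunch menu": [0.0, 0.9, 0.3],
}
# Normalize once at insert time so search only needs dot products.
index = {doc_id: normalize(vec) for doc_id, vec in docs.items()}

def search(query_vec, k=2):
    """Exact (brute-force) k-nearest-neighbour search by cosine similarity."""
    q = normalize(query_vec)
    scored = ((sum(a * b for a, b in zip(q, v)), doc_id) for doc_id, v in index.items())
    return [doc_id for _, doc_id in heapq.nlargest(k, scored)]

# Assumed embedding of a question such as "How do I send an item back?"
print(search([0.85, 0.2, 0.05]))
```

For this assumed query vector, the two refund and return entries come back as the nearest neighbours. The holiday and lunch entries score far lower.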

In Noida, enterprise AI systems rely on internal documents, policies, and workflow data. Programs like the Generative AI Course in Noida now emphasize vector-based memory because companies need AI that understands their internal language and context, not just patterns learned from public data.

Retrieval-Based Memory in AI Systems:

Many modern AI systems do not answer directly from the model. They first retrieve relevant information and then generate an answer from it. This method is called retrieval-augmented generation (RAG).

The flow works like this:

- A query is converted into a vector

- Related data is fetched from the vector database

- The data is added to the model input

- The model generates a grounded response

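This usually makes answers more accurate and reduces made-up output. Instead of relying only on what it memorized during training, the model can draw on retrieved memory. It can still make mistakes if the retrieved data is wrong or incomplete.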
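The four-step flow can be sketched in Python. Everything here is hypothetical. `embed` is a stand-in that returns a fixed toy vector instead of calling a real embedding model. The knowledge chunks are invented examples. The final language-model call is left out, because it depends on the provider.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical knowledge chunks with assumed toy embeddings.
chunks = [
    ("Refunds are issued within 14 days of a return.", [0.9, 0.1, 0.0]),
    ("The office is closed on public holidays.", [0.0, 0.1, 0.9]),
]

def embed(text):
    """Hypothetical stand-in for an embedding model; always returns one toy vector."""
    return [0.8, 0.2, 0.1]

def retrieve(query, k=1):
    q = embed(query)                                   # step 1: query -> vector
    ranked = sorted(chunks, key=lambda c: cosine(c[1], q), reverse=True)
    return [text for text, _ in ranked[:k]]            # step 2: fetch related data

def build_prompt(query):
    context = "\n".join(f"- {t}" for t in retrieve(query))
    return (                                           # step 3: add data to model input
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
    )

prompt = build_prompt("When will I get my money back?")
print(prompt)
# Step 4 would send `prompt` to a language model; that call is not shown here.
```

The model receives the retrieved refund rule alongside the question. That retrieved text is what grounds the response.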

Technical benefits:

- Memory can be updated anytime

- Sensitive data stays outside the model's weights

- New knowledge does not require retraining or enlarging the model

- Answers stay linked to real data

This design is now common in production AI systems that need up-to-date or private knowledge.
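A toy store makes the "memory can be updated anytime" point concrete. `TinyVectorStore` below is a hypothetical class, not any product's API. It overwrites or deletes entries by id, and the next query sees the change immediately, with no model retraining. The plan prices are invented example data.

```python
import math

class TinyVectorStore:
    """Minimal in-memory vector store (hypothetical, for illustration only)."""

    def __init__(self):
        self._vectors = {}  # id -> unit-length vector
        self._texts = {}    # id -> original text

    @staticmethod
    def _normalize(v):
        norm = math.sqrt(sum(x * x for x in v))
        return [x / norm for x in v]

    def upsert(self, doc_id, text, vector):
        """Insert a new entry or overwrite an existing one with the same id."""
        self._vectors[doc_id] = self._normalize(vector)
        self._texts[doc_id] = text

    def delete(self, doc_id):
        """Remove an entry; unknown ids are ignored."""
        self._vectors.pop(doc_id, None)
        self._texts.pop(doc_id, None)

    def query(self, vector, k=1):
        """Return the texts of the k entries most similar to `vector`."""
        q = self._normalize(vector)
        ranked = sorted(
            self._vectors,
            key=lambda i: sum(a * b for a, b in zip(q, self._vectors[i])),
            reverse=True,
        )
        return [self._texts[i] for i in ranked[:k]]

store = TinyVectorStore()
store.upsert("pricing", "The Pro plan costs $10 per month.", [1.0, 0.0])
before = store.query([0.9, 0.1])

# The price changes: overwrite the entry by id. No model is retrained.
store.upsert("pricing", "The Pro plan costs $12 per month.", [1.0, 0.0])
after_update = store.query([0.9, 0.1])

# Outdated knowledge can also be removed outright.
store.delete("pricing")
after_delete = store.query([0.9, 0.1])

print(before, after_update, after_delete)
```

Keying entries by a stable id is a simple design choice: an update replaces the old fact instead of leaving two conflicting versions to compete at query time.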

In Bangalore, startups building AI assistants for coding and analytics depend on vector databases for speed. This demand is why Gen Ai Course in Bangalore places heavy focus on vector indexing and search tuning rather than surface-level AI features.

Scaling Memory Without Slowing AI:

As data grows, search speed must stay stable. Vector databases are designed for scale.

They use several techniques:

- Approximate nearest neighbor (ANN) search

- Graph-based indexing

- Data sharding across nodes

- Parallel query handling

ANN search accepts a small loss in accuracy in exchange for large speed improvements: it may occasionally miss one of the true nearest vectors. The trade-off is usually acceptable because AI retrieval needs relevant matches, not a mathematically perfect ranking.
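Graph-based indexing can be illustrated with a simplified greedy search over a k-nearest-neighbour graph. This is a teaching sketch, not HNSW or any library's implementation. The sizes (`DIM`, `N`, `M`, `ef`) are assumed values, the graph is built by brute force, and the data is random. The sketch compares the approximate result with an exact scan and reports how many distance computations the graph search needed.

```python
import heapq
import math
import random

random.seed(0)
DIM, N, M = 8, 500, 8  # assumed: vector dimensions, stored vectors, neighbours per node

points = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(N)]

# Build a k-nearest-neighbour graph by brute force (O(N^2) distance computations).
# Real libraries build their graphs incrementally and far more cheaply.
graph = []
for i, p in enumerate(points):
    nearest = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)[:M]
    graph.append([j for _, j in nearest])

def graph_search(query, k=5, ef=32, entry=0):
    """Greedy best-first search over the graph, keeping the ef closest nodes seen."""
    d0 = math.dist(query, points[entry])
    visited = {entry}
    candidates = [(d0, entry)]   # min-heap: closest unexplored node first
    best = [(-d0, entry)]        # max-heap via negation: ef closest nodes found so far
    while candidates:
        d, node = heapq.heappop(candidates)
        if d > -best[0][0]:      # closest candidate is farther than the worst kept result
            break
        for nb in graph[node]:
            if nb in visited:
                continue
            visited.add(nb)
            dn = math.dist(query, points[nb])
            if len(best) < ef or dn < -best[0][0]:
                heapq.heappush(candidates, (dn, nb))
                heapq.heappush(best, (-dn, nb))
                if len(best) > ef:
                    heapq.heappop(best)  # drop the farthest kept result
    ranked = sorted((-neg_d, i) for neg_d, i in best)[:k]
    return [i for _, i in ranked], len(visited)

def exact_search(query, k=5):
    """Brute-force baseline: compares the query with every stored vector."""
    return [j for _, j in sorted((math.dist(query, p), j) for j, p in enumerate(points))[:k]]

query = [random.gauss(0, 1) for _ in range(DIM)]
approx, n_computed = graph_search(query)
exact = exact_search(query)
recall = len(set(approx) & set(exact)) / len(exact)
print(f"recall@5 = {recall:.2f}, distances computed = {n_computed} of {N}")
```

The printed recall depends on the random data and the assumed parameters. Raising `ef` or `M` generally buys higher recall at the cost of more distance computations, which is the trade-off described above.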

Many vector databases are designed for distributed use. They scale horizontally by adding nodes, which helps keep query latency predictable as data grows.

This approach is critical in enterprise systems where millions of vectors must be searched in milliseconds.
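Sharding and parallel query handling can be sketched the same way. In this hypothetical setup, vectors are split round-robin across four in-memory shards. Each shard returns its own top-k, and the partial lists are merged. The global top-k must appear in some shard's top-k, so the merged answer matches a single-node exact search. The sizes are assumed values.

```python
import heapq
import math
import random
from concurrent.futures import ThreadPoolExecutor

random.seed(1)
DIM, N, NUM_SHARDS, K = 4, 1000, 4, 3  # assumed sizes for illustration

vectors = {i: [random.random() for _ in range(DIM)] for i in range(N)}

# Round-robin sharding by id. In a real cluster each shard would live on its own
# node; here the shards are just separate dictionaries in one process.
shards = [{} for _ in range(NUM_SHARDS)]
for vid, vec in vectors.items():
    shards[vid % NUM_SHARDS][vid] = vec

def search_shard(shard, query, k):
    """Exact top-k within one shard, as (distance, id) pairs sorted closest first."""
    return heapq.nsmallest(k, ((math.dist(query, v), vid) for vid, v in shard.items()))

def distributed_search(query, k=K):
    """Fan the query out to every shard, then merge the sorted partial results."""
    with ThreadPoolExecutor(max_workers=NUM_SHARDS) as pool:
        partials = list(pool.map(lambda shard: search_shard(shard, query, k), shards))
    merged = heapq.merge(*partials)  # lazily merges the already-sorted lists
    return [vid for _, vid in list(merged)[:k]]

query = [0.5] * DIM
single_node = [vid for _, vid in search_shard(vectors, query, K)]
print(distributed_search(query) == single_node)  # True
```

The threads here only illustrate the fan-out. Because of Python's global interpreter lock, they give little speedup for this CPU-bound loop, whereas real shards run on separate machines.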

Vector Databases vs Traditional Databases:

| Area | Traditional Database | Vector Database |
| --- | --- | --- |
| Data format | Tables and rows | Numerical vectors |
| Search type | Exact match | Similarity search |
| Context handling | Weak | Strong |
| AI support | Limited | Native |
| Scalability | Rigid | Flexible |

This difference explains why vector databases are now core AI infrastructure.

Summary:

Vector databases give AI systems a working memory that behaves more like human recall. They replace keyword matching with meaning-based retrieval. This helps AI stay accurate, scalable, and adaptable. The technical value lies in how memory is indexed, searched, and reused. As AI moves deeper into enterprise systems, memory design becomes more important than model size. Teams that understand vector databases build AI that responds with context, adapts to change, and guesses less. This is why vector databases are no longer an advanced add-on. They are the backbone of modern AI memory systems.