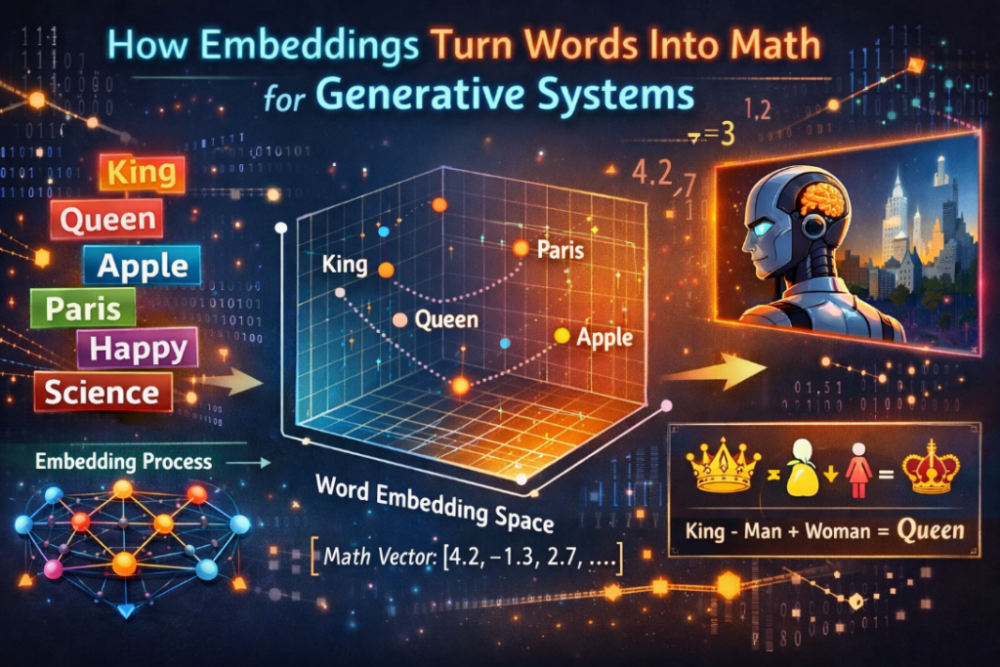

Language is not natural to machines. A system cannot read words or understand meaning like humans do. It can only work with numbers. To handle text, words must be converted into numbers in a way that preserves their meaning. This is where embeddings are used. Embeddings convert text into numeric vectors that systems can store, compare, and process.

Embeddings are the base layer of generative systems. They capture how words relate to each other and determine which pieces of text are close in meaning and which are not. Without embeddings, systems would fall back on exact keyword matching, which misses synonyms and paraphrases. Anyone enrolling in an Agentic AI Online Course today is introduced to embeddings early, not as theory, but as the mathematical backbone of decision-making systems.

What Embeddings Are in Simple Technical Terms

An embedding is a list of numbers. This list is called a vector. Each vector represents text. The text can be a word, a sentence, or a full document. The numbers are not chosen by hand. They are learned during training.

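Each number in the vector carries part of the signal. It is tempting to think of one number as encoding topic, another usage, and another grammar, but in learned embeddings a single number rarely maps to one readable feature. Meaning is spread across all the numbers, and only the vector as a whole represents the overall sense of the text.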

Most embeddings have hundreds of numbers, called dimensions. Some have more than a thousand. More dimensions can capture more detail, but they also mean more storage and slower comparisons.
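The storage cost is simple arithmetic. The sketch below is a rough estimate that assumes each number is stored as a 32-bit float (4 bytes) and ignores index and metadata overhead. The function name `embedding_storage_bytes` is made up for this example.

```python
def embedding_storage_bytes(num_vectors: int, dimensions: int, bytes_per_value: int = 4) -> int:
    """Raw size of dense vectors, ignoring index and metadata overhead."""
    return num_vectors * dimensions * bytes_per_value


# One million vectors stored as 32-bit floats (assumed: 4 bytes per value).
for dims in (384, 768, 1536):
    size_gb = embedding_storage_bytes(1_000_000, dims) / 1e9
    print(f"{dims} dimensions: {size_gb:.2f} GB")
# prints:
# 384 dimensions: 1.54 GB
# 768 dimensions: 3.07 GB
# 1536 dimensions: 6.14 GB
```

Doubling the vector size doubles the raw storage, and every comparison also touches twice as many numbers.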

The most important idea is distance.

- Vectors that are close together have similar meaning

- Vectors that are far apart have different meaning

The system does not read text. It compares numbers. Closeness is computed with a similarity measure such as cosine similarity or Euclidean distance.
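Here is a minimal sketch of that comparison using cosine similarity, one common choice. The three-number vectors are hand-written toy values, not output from a real model, and real embeddings would have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors (assumed values, chosen by hand for illustration only).
cat = [0.9, 0.8, 0.1]
kitten = [0.85, 0.75, 0.2]
invoice = [0.1, 0.2, 0.95]

print(cosine_similarity(cat, kitten))   # high: the vectors point the same way
print(cosine_similarity(cat, invoice))  # low: the vectors point in different directions
```

Cosine similarity ignores vector length and looks only at direction, which is one reason it is often used for text.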

How Meaning Is Learned During Training

Embeddings are learned from large amounts of text. The system studies how words appear together. Words that appear in similar contexts get similar vectors.

Training does not focus on dictionary meaning. It focuses on usage. This is why embeddings can work well even when a word changes meaning based on context: models that embed whole sentences produce a different vector for the same word when the words around it change.

During training, the system sees many text pairs.

- Related text is pulled closer

- Unrelated text is pushed apart

This shapes the vector space. Over time, clear patterns form. Topics group together. Writing styles group together. Technical terms sit near each other.
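The pull and push idea can be sketched with a margin-based contrastive loss. This is one textbook formulation, shown as an assumption for illustration. Real models usually train on large batches with related objectives, and the names `contrastive_step`, `margin`, and `lr` are made up for this example.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_step(a, b, related, margin=1.0, lr=0.1):
    """Apply one gradient step to a pair of vectors and return the new pair.

    Related pairs minimise d^2, which pulls them together.
    Unrelated pairs minimise (margin - d)^2 while d < margin, which pushes them apart.
    """
    d = euclidean(a, b)
    if related:
        scale = 2.0                          # gradient of d^2 w.r.t. a is 2 * (a - b)
    elif 0.0 < d < margin:
        scale = -2.0 * (margin - d) / d      # gradient of (margin - d)^2 w.r.t. a
    else:
        return list(a), list(b)              # already far enough apart: no update
    grad_a = [scale * (x - y) for x, y in zip(a, b)]
    new_a = [x - lr * g for x, g in zip(a, grad_a)]
    new_b = [y + lr * g for y, g in zip(b, grad_a)]  # gradient w.r.t. b is -grad_a
    return new_a, new_b


pulled = contrastive_step([0.0, 0.0], [1.0, 0.0], related=True)
pushed = contrastive_step([0.0, 0.0], [0.5, 0.0], related=False)
print(euclidean(*pulled))  # smaller than the starting distance of 1.0
print(euclidean(*pushed))  # larger than the starting distance of 0.5
```

Repeating steps like this over many pairs is what gradually arranges the vector space into clusters.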

Good training data matters a lot. Poor data creates weak embeddings. Balanced data creates strong embeddings. Learners pursuing a Generative AI Course in Delhi are increasingly exposed to this practical reality.

Why Embeddings Are Used Before Any Text Output

When a user gives input, a retrieval-based system does not generate a response immediately. First, the input is converted into an embedding. In this kind of workflow, that step comes before anything else.

That embedding is then compared with stored embeddings, which may represent:

- Stored documents

- Stored notes

- Stored knowledge

- Stored past interactions

The system finds the closest matches using vector search. Only the most relevant data is selected. Then text is generated using that selected data.
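The flow above can be sketched in a few lines. This is a simplified illustration, not a production pipeline: the stored texts, the three-number vectors, and the prompt wording are all made up, and in a real system the vectors would come from an embedding model.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query_vec, store, k=2):
    """Score every stored item against the query and keep the k best."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Hypothetical store of (text, embedding) pairs with hand-written toy vectors.
store = [
    ("Refund policy: returns accepted within 30 days", [0.9, 0.1, 0.0]),
    ("Shipping times for international orders", [0.1, 0.9, 0.1]),
    ("How to request a refund", [0.8, 0.2, 0.1]),
]

question = "Can I get my money back?"
query_vec = [0.85, 0.15, 0.05]  # assumed embedding of the question

matches = top_k(query_vec, store, k=2)
context = "\n".join(text for _, text in matches)

# Only the selected text is handed to the generation step.
prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
print(prompt)
```

The shipping document never reaches the generation step, because its vector is far from the query.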

This process improves accuracy. It reduces wrong answers. It keeps responses tied to real information.

This is why embeddings are critical in modern generative workflows.

Embeddings Used for Memory and Decision Flow

Advanced systems use embeddings as memory. Every interaction can be saved as a vector. This allows long-term recall.

Memory works through similarity.

- Current state is converted into a vector

- Past vectors are searched

- Closest matches are treated as useful memory
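These three steps can be sketched as a small in-memory class. The class name `VectorMemory`, its methods, and the toy two-number vectors are assumptions made for this example. A real system would use an embedding model and a persistent vector store.

```python
import math


def _cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class VectorMemory:
    """Keeps past interactions as (vector, payload) pairs and recalls by similarity."""

    def __init__(self):
        self._items = []

    def remember(self, vector, payload):
        self._items.append((list(vector), payload))

    def recall(self, state_vector, k=1, min_similarity=0.0):
        # Search past vectors and keep the closest ones above the threshold.
        scored = [(_cosine(state_vector, vec), payload) for vec, payload in self._items]
        scored = [item for item in scored if item[0] >= min_similarity]
        scored.sort(key=lambda item: item[0], reverse=True)
        return [payload for _, payload in scored[:k]]


memory = VectorMemory()
memory.remember([1.0, 0.0], "user prefers metric units")
memory.remember([0.0, 1.0], "user asked about invoices")

current_state = [0.9, 0.1]  # assumed embedding of the current situation
print(memory.recall(current_state, k=1))
# prints: ['user prefers metric units']
```

The `min_similarity` threshold is a design choice: without it, the closest match is returned even when nothing stored is actually relevant.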

How Embeddings Are Stored and Searched

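Storing embeddings is not simple. Traditional databases are built for exact matches and range queries, not for finding the nearest neighbors of a high-dimensional vector. Vector databases are used instead. These systems focus on fast similarity search. Rather than comparing the query against every stored vector, they use approximate nearest neighbor indexes, which trade a small amount of accuracy for a large gain in speed.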
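To show how a search can skip most vectors, here is a toy index based on random hyperplane hashing, one simple family of approximate methods. It is an illustrative sketch only. Production vector databases typically use more refined indexes, and the class name `RandomHyperplaneIndex` and its parameters are made up for this example.

```python
import math
import random
from collections import defaultdict


def _cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class RandomHyperplaneIndex:
    """Groups vectors into buckets by which side of each random plane they fall on."""

    def __init__(self, dimensions, num_planes=8, seed=0):
        rng = random.Random(seed)
        self._planes = [[rng.gauss(0.0, 1.0) for _ in range(dimensions)]
                        for _ in range(num_planes)]
        self._buckets = defaultdict(list)

    def _signature(self, vector):
        # One bit per plane: is the vector on the non-negative side?
        return tuple(sum(p * x for p, x in zip(plane, vector)) >= 0.0
                     for plane in self._planes)

    def add(self, item_id, vector):
        self._buckets[self._signature(vector)].append((item_id, list(vector)))

    def candidates(self, query):
        # Only vectors sharing the query's bucket are considered.
        return self._buckets.get(self._signature(query), [])

    def search(self, query, k=3):
        scored = [(_cosine(query, vec), item_id) for item_id, vec in self.candidates(query)]
        scored.sort(reverse=True)
        return [item_id for _, item_id in scored[:k]]


rng = random.Random(1)
vectors = {i: [rng.gauss(0.0, 1.0) for _ in range(16)] for i in range(1000)}
index = RandomHyperplaneIndex(dimensions=16, num_planes=8, seed=0)
for item_id, vec in vectors.items():
    index.add(item_id, vec)

query = vectors[42]
candidates = index.candidates(query)
print(f"compared {len(candidates)} of {len(vectors)} vectors")
print(index.search(query, k=3))
```

The catch is that a true neighbor can land in a different bucket and be missed. Using more planes makes the search faster but increases the chance of misses.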

Main goals of vector storage:

- Fast search

- Acceptable accuracy

- Low memory use

Here is a simple table showing common embedding uses:

| Use Area | Vector Size (dimensions) | Main Purpose |
| --- | --- | --- |
| Word meaning | 300–768 | Language structure |
| Sentence meaning | 384–1024 | Search and matching |
| Document meaning | 768–1536 | Knowledge lookup |
| Memory storage | 512–1024 | Recall and planning |

Engineers must tune these systems. A poorly chosen index or vector size can slow searches down or cause relevant results to be missed.

Design Choices That Affect Embedding Quality

Several design choices affect how well embeddings work. Key factors include:

- Vector size

- Training data quality

- Update frequency

- Search method

Security is another concern. Embeddings can retain information about the text they were computed from, so vectors built from sensitive content should be protected as carefully as the source data. Students enrolled in a Masters in Generative AI Course often work on such systems as capstone projects, learning how embedding drift, storage limits, and retrieval noise affect downstream reasoning.

Summing Up

Embeddings are the core layer of generative systems. They allow machines to work with language using numbers instead of rules. Every search, memory lookup, and response depends on them. While users never see embeddings, they shape every result. Understanding how embeddings work gives deep insight into how modern language systems operate.