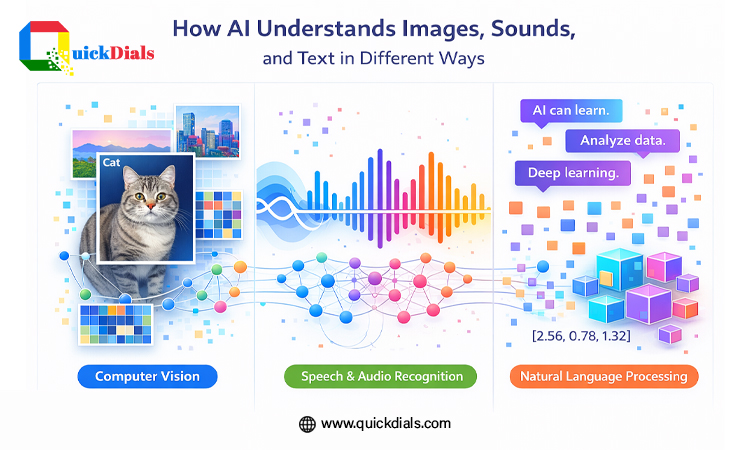

Machines deal with numbers. Every input is converted into numbers before a model can use it. Pictures, audio, and words all end up as numbers, but their internal structure could not be more different. Machines therefore learn each of them in a different way, and each has its own set of technical rules. This difference is highlighted in the advanced learning paths of an artificial intelligence course in Noida, which focus on how the shape of the data decides the model.

Understanding these differences is critical for building systems that work well. It explains why some systems perform well on text but poorly on audio, and why image models can fail when the lighting changes.

How Image Data Is Understood

Images are made of pixels. Each pixel holds one number for brightness in a grayscale image, or usually three numbers (red, green, and blue) in a color image. Together, pixels form a grid. Machines read this grid exactly as it is, without knowing what the image shows.

Important technical points about image data:

- Pixel position matters

- Nearby pixels are related

- Shapes matter more than exact color

- Small shifts should not change meaning

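To handle this, machines use models that scan the image in small parts, most commonly convolutional neural networks (CNNs). Each scan slides a small filter over the grid to detect a pattern. Early stages find lines and edges. Later stages combine these into larger shapes.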
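The scanning idea can be sketched in a few lines. This is only an illustration, assuming NumPy is available: the toy image, the hand-written `vertical_edge` filter, and the helper name `slide_filter` are all made up for this example. In a real model the filter values are learned from data rather than written by hand.

```python
import numpy as np

def slide_filter(image, kernel):
    """Slide `kernel` over `image` (no padding) and return the response map.

    This is the cross-correlation that convolutional layers compute.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(patch * kernel)
    return out

# Toy 5x6 grayscale image: dark left half (0), bright right half (9).
image = np.array([[0, 0, 0, 9, 9, 9]] * 5, dtype=float)

# Hand-written filter that responds where brightness rises from left to right.
vertical_edge = np.array([[-1, 0, 1],
                          [-1, 0, 1],
                          [-1, 0, 1]], dtype=float)

response = slide_filter(image, vertical_edge)
print(response[0])  # each row is [0, 27, 27, 0]: strong only around the edge
```

The response is zero over the flat dark and bright regions and large only where the edge sits, which is what "detecting a pattern" means at this early stage.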

Common problems with image data:

- Objects may be partly hidden

- Lighting changes affect values

- Objects appear at different sizes

- Background noise interferes

Machines do not know objects. They only match learned patterns. When patterns change too much, accuracy drops.
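The lighting problem is easy to see in numbers. The sketch below assumes NumPy and uses a made-up 3x3 image and a made-up lighting change (`1.2 * image + 30.0`). It shows that the raw pixel values move a lot, while per-image standardization, one common mitigation, cancels this particular kind of change. Real lighting changes are rarely this uniform, so this is not a complete fix.

```python
import numpy as np

def standardize(image):
    """Rescale an image to zero mean and unit variance."""
    return (image - image.mean()) / image.std()

# Toy image: dark region on the left, bright stripe on the right.
image = np.array([[10.0, 10.0, 200.0],
                  [10.0, 10.0, 200.0],
                  [10.0, 10.0, 200.0]])

# Hypothetical lighting change: more contrast plus a uniform brightness offset.
brighter = 1.2 * image + 30.0

# Largest per-pixel difference before and after standardization.
raw_gap = np.abs(image - brighter).max()
normalized_gap = np.abs(standardize(image) - standardize(brighter)).max()

print(raw_gap)         # about 70: the raw numbers differ a lot
print(normalized_gap)  # about 0: the standardized grids match
```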

How Sound Data Is Understood

Sound is not fixed. It changes every moment. Machines treat sound as a flowing signal, not as a picture.

Basic facts about sound data:

- It is a long list of values

- Order matters

- Timing changes meaning

- Noise is always present

Sound is usually converted into a spectrogram, a time-frequency format that shows how strong each pitch (frequency) is at each moment. This gives the model a more structured input to learn from than the raw signal.
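A minimal sketch of this conversion, assuming NumPy: the signal is cut into short overlapping frames and a Fourier transform is applied to each one. The sample rate, frame length, hop size, and the two-tone test signal are arbitrary choices for illustration, and the helper `spectrogram` is written here rather than taken from an audio library.

```python
import numpy as np

sample_rate = 8000   # samples per second (assumed for this example)
frame_len = 400      # samples per frame (50 ms)
hop = 200            # step between frame starts (50% overlap)

# One second of audio: a 440 Hz tone, then an 880 Hz tone.
t = np.arange(sample_rate) / sample_rate
signal = np.where(t < 0.5,
                  np.sin(2 * np.pi * 440 * t),
                  np.sin(2 * np.pi * 880 * t))

def spectrogram(x, frame_len, hop):
    """Magnitude of a short-time Fourier transform, one row per time frame."""
    window = np.hanning(frame_len)
    starts = range(0, len(x) - frame_len + 1, hop)
    frames = np.stack([x[s:s + frame_len] * window for s in starts])
    return np.abs(np.fft.rfft(frames, axis=1))

spec = spectrogram(signal, frame_len, hop)
freqs = np.fft.rfftfreq(frame_len, d=1 / sample_rate)

# Strongest frequency in an early frame and in a late frame.
early_peak = freqs[spec[2].argmax()]
late_peak = freqs[spec[-3].argmax()]
print(spec.shape, early_peak, late_peak)
```

Each row of `spec` describes one moment in time and each column one frequency band, so the early rows peak near 440 Hz and the late rows near 880 Hz. The order of the rows carries the timing information.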

Sound models focus on sequence. What comes before affects what comes after.

Major challenges with sound data:

- Background noise mixes with signal

- Speaking speed varies

- Tone and pitch differ

- Devices affect sound quality

Because of this, sound systems can fail when recording conditions differ from those seen in training.

How Text Data Is Understood

Text is symbolic. For machines, words carry no meaning by themselves. Meaning is learned from patterns.

How text is processed:

- Words are split into units

- Units are converted into numbers

- Numbers are placed in order
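The three steps above can be sketched in plain Python. This is a deliberately simple illustration: splitting on whitespace and numbering words by first appearance are assumptions made for the example, and the helper names are hypothetical. Production systems typically split words into smaller pieces and handle unknown words, which this sketch does not.

```python
def tokenize(text):
    """Split text into lowercase word units (a deliberately simple rule)."""
    return text.lower().split()

def build_vocab(sentences):
    """Assign each distinct unit an integer ID in order of first appearance."""
    vocab = {}
    for sentence in sentences:
        for token in tokenize(sentence):
            if token not in vocab:
                vocab[token] = len(vocab)
    return vocab

def encode(text, vocab):
    """Convert text into an ordered list of integer IDs."""
    return [vocab[token] for token in tokenize(text)]

corpus = ["the dog chased the cat", "the cat chased the dog"]
vocab = build_vocab(corpus)

ids_a = encode(corpus[0], vocab)
ids_b = encode(corpus[1], vocab)
print(ids_a)  # [0, 1, 2, 0, 3]
print(ids_b)  # [0, 3, 2, 0, 1]
```

Both sentences use exactly the same numbers. Only the order differs, and the order is what separates one meaning from the other.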

Meaning comes from context. The same word can mean different things based on nearby words.

Key points about text data:

- Word order is critical

- Context controls meaning

- Small changes have big effects

- Language is full of exceptions

Text systems predict what is likely next. They do not understand facts. This is why outputs can sound correct but still be wrong.
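A toy next-word predictor makes this concrete. The sketch below counts which word follows which in a tiny, deliberately wrong training set invented for this example. It is far simpler than a modern language model, but the failure it shows has the same cause: the prediction follows frequency, not truth.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Made-up training text in which the wrong answer appears more often.
training_text = [
    "the capital of france is lyon",
    "the capital of france is lyon",
    "the capital of france is paris",
]

model = train_bigrams(training_text)
guess = predict_next(model, "is")
print(guess)  # lyon
```

The completed sentence reads fluently, yet it is false, because the model only reproduces the most common pattern it has seen.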

Training programs like an AI course in Gurgaon now focus more on context handling and domain-specific text learning, because general text models can fail when the patterns in the input differ from those seen in training.

Why One Method Cannot Handle All Data

Images, sounds, and text behave differently at a mathematical level. This makes shared learning difficult.

Simple comparison:

| Data Type | Structure | Main Focus | Main Problem |
| --- | --- | --- | --- |
| Images | Pixel grid | Space and shape | Scale and lighting |
| Sound | Time signal | Order and timing | Noise |
| Text | Symbol sequence | Context | Ambiguity |

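Pushing all three raw formats through one undifferentiated method generally leads to poor results. Modern systems avoid this by giving each data type its own processing path.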

Advanced programs such as an AI course in Delhi now explain why separate processing paths are required before any data can be combined.

How Different Data Types Are Combined

Modern systems process each input separately first.

Steps used:

- Images go through spatial models

- Sounds go through sequence models

- Text goes through context models

After this, each output is converted into a similar numeric form. Only these learned features are combined.
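The conversion and combination step can be sketched as follows, assuming NumPy. Random vectors stand in for the outputs of the three separate models, and the feature sizes, the shared size of 64, and the helper `project` are assumptions for illustration. The projection matrices here are random, whereas in a trained system they would be learned.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-ins for the outputs of three separate models (sizes are assumptions).
image_features = rng.normal(size=512)
sound_features = rng.normal(size=128)
text_features = rng.normal(size=768)

shared_dim = 64  # common size that every input is mapped into

def project(features, out_dim, rng):
    """Map features into the shared space with a random (untrained) matrix."""
    in_dim = features.shape[0]
    weights = rng.normal(size=(out_dim, in_dim)) / np.sqrt(in_dim)
    return weights @ features

projected = [project(f, shared_dim, rng)
             for f in (image_features, sound_features, text_features)]

# Only the learned-feature vectors are joined, never the raw inputs.
fused = np.concatenate(projected)
print(fused.shape)  # (192,)
```

Concatenation is only one way to join the vectors. Whatever method is used, the key point is that all three inputs have the same numeric form before they meet.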

Important points:

- Raw data is not mixed directly

- Balance between inputs is critical

- Errors are harder to trace

- One input can dominate others

This is a major challenge in real-world systems.
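The dominance problem can be shown with two small vectors. In this sketch, which assumes NumPy and uses made-up feature values, the image features happen to be numerically much larger than the text features. L2 normalization before combining is one common way to restore balance, offered here as an illustration rather than the only option.

```python
import numpy as np

def l2_normalize(v):
    """Scale a vector to unit length."""
    return v / np.linalg.norm(v)

def share_of_energy(parts):
    """Fraction of the combined squared magnitude contributed by each part."""
    energy = np.array([np.sum(p ** 2) for p in parts])
    return energy / energy.sum()

# Made-up feature vectors on very different numeric scales.
image_vec = np.array([120.0, -80.0, 60.0, 100.0])
text_vec = np.array([0.3, -0.1, 0.2, 0.4])

raw_share = share_of_energy([image_vec, text_vec])
balanced_share = share_of_energy([l2_normalize(image_vec),
                                  l2_normalize(text_vec)])

print(raw_share)       # image share is above 0.99, text is almost invisible
print(balanced_share)  # each input contributes half
```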

Key Points to Remember

- All data becomes numbers

- Images depend on space

- Sound depends on time

- Text depends on order and context

- Each type needs its own model

- Combining data types adds complexity and risk

Summing Up

Machines understand images, sounds, and text through separate technical paths. These paths exist because each data type behaves differently at a structural level. Image systems rely on spatial patterns. Sound systems rely on time-based signals. Text systems rely on context and sequence. Strong systems respect these differences instead of forcing one method on all data.